Ever since the Play framework came onto the scene, I’ve been sold on the idea of containerless deployment. The old model of a bunch of apps deployed in a single container sharing resources and Java EE components just never really materialized. Thanks to the thriving Grails plugin community, we have the Standalone App Runner plugin that makes containerless deployment dead simple for Grails apps. But what would you be giving up in terms of performance if you move away from a commercial enterprise-y app server like WebLogic in favor of embedded Tomcat or Jetty? As it turns out, absolutely nothing.

The Test

I ran a simple benchmark to compare the performance of the same Grails app deployed on WebLogic, embedded Tomcat, and embedded Jetty (the latter two both via the Standalone App Runner plugin, version 1.1.1). The app is a simple stateless REST service that returns a JSON payload representing an order from the default Grails embedded H2 in-memory database. Each test consisted of a batch of 20K requests, and all tests were run with 10, 20, 40, and 80 threads in JMeter with no wait time between requests. Each test set was run 10 times, and the results were averaged.
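For readers unfamiliar with this kind of closed-loop test, here is a minimal Java sketch of the idea: a fixed number of worker threads share a batch of requests, and each worker fires its next request as soon as the previous one returns, with no wait time. This is not how JMeter is implemented internally, and `sendRequest` is a hypothetical stand-in for the actual HTTP GET against the order service.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.BooleanSupplier;

class ClosedLoopLoad {

    // Summary of one batch: requests completed, errors, and mean latency in ms.
    record Result(int completed, int errors, double meanMillis) {}

    // Spreads totalRequests calls across `threads` workers with no wait time.
    // sendRequest is a hypothetical stand-in for the HTTP call: true = success.
    static Result run(int threads, int totalRequests, BooleanSupplier sendRequest) {
        AtomicInteger remaining = new AtomicInteger(totalRequests);
        AtomicInteger completed = new AtomicInteger();
        AtomicInteger errors = new AtomicInteger();
        AtomicLong totalNanos = new AtomicLong();

        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.execute(() -> {
                // Claim one request at a time until the batch is used up.
                while (remaining.getAndDecrement() > 0) {
                    long start = System.nanoTime();
                    boolean ok = sendRequest.getAsBoolean();
                    totalNanos.addAndGet(System.nanoTime() - start);
                    completed.incrementAndGet();
                    if (!ok) {
                        errors.incrementAndGet();
                    }
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.HOURS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }

        int n = completed.get();
        double meanMillis = n == 0 ? 0.0 : totalNanos.get() / 1e6 / n;
        return new Result(n, errors.get(), meanMillis);
    }

    public static void main(String[] args) {
        // Simulated service that always succeeds instantly; swap in a real GET.
        for (int threads : new int[] {10, 20, 40, 80}) {
            Result r = run(threads, 20_000, () -> true);
            System.out.printf("%d threads: %d requests, %d errors, %.4f ms mean%n",
                    threads, r.completed(), r.errors(), r.meanMillis());
        }
    }
}
```

Repeating `run` for each thread level several times and averaging the results mirrors the structure of the test described above.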

The Setup

Each version of the app was deployed on the same physical server (a Dell something or other running Solaris…) with WebLogic 10.3.6 installed. A single instance of each container was run. Load was generated from a MacBook Pro running JMeter. During the tests the server never exceeded 70% CPU utilization, and the load generator never exceeded 80% CPU utilization.

The Results

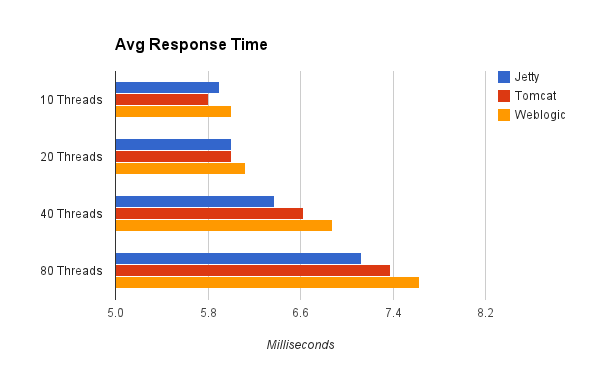

Response Time

The chart above shows raw response time as recorded by JMeter for a GET request to the service (shorter bars indicate better performance). Interestingly, both Jetty and Tomcat outperformed WebLogic across the board. At 10 threads, Tomcat had the fastest response times. At 20 threads, Tomcat and Jetty were roughly even, and both were ahead of WebLogic. At 40 and 80 threads, Jetty was the clear leader, followed by Tomcat and then WebLogic. So it seems there’s something to the argument that a stripped-down, lightweight servlet container should be able to outperform a bloated, full-featured commercial server like WebLogic.

Throughput

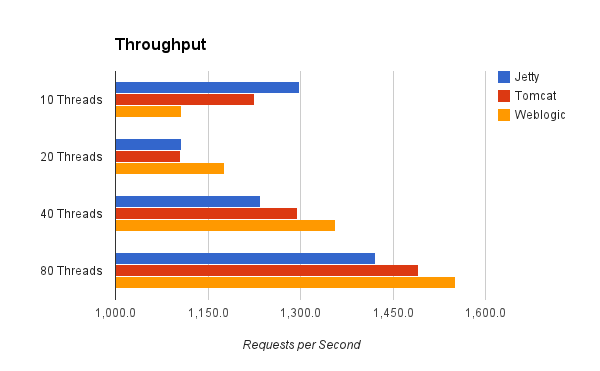

It seems logical that a super-lightweight server like Jetty should be faster than WebLogic in terms of raw speed, but what about throughput? Here the numbers tell a slightly different story.

The chart above shows average throughput in requests per second (longer bars are better). At lower levels of concurrency, both Tomcat and Jetty churned through more requests per second than WebLogic, with Jetty showing a clear lead at 10 threads. However, at both 40 and 80 threads, WebLogic gained an edge, followed by Tomcat and then Jetty. This suggests that WebLogic’s thread management may be optimized for throughput at the expense of raw speed, a logical design choice for an enterprise product like WebLogic.

The Data

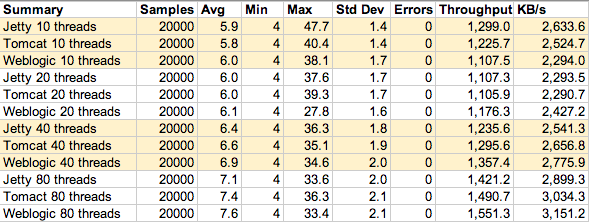

The table above summarizes the raw data across all of the test runs. It’s worth noting that, in the hundreds of thousands of highly concurrent requests run during the course of this test, not a single one resulted in an error. This was by no means a long-duration torture test, but it does show that there were no obvious differences in reliability between the containerless options tested and WebLogic.

Conclusion

In my opinion, the arguments for going containerless speak for themselves. What could be simpler from an operations standpoint than dropping a jar file on a bare OS and running it, with no server installation or configuration to bother with? The purpose of this test was to see whether you would be sacrificing something significant by moving from a more traditional enterprise deployment model to the containerless model. The answer is a resounding no. In raw speed tests, the containerless options outperformed WebLogic across the board. In throughput tests, WebLogic claimed an edge at higher levels of concurrency. However, keep in mind that the margins separating the servers were tiny in all cases. Realistically, you would probably never notice a difference in performance between the deployment scenarios tested. And that was the point. I, like many people steeped in “enterprise” culture, held the illogical belief that WebLogic, a big commercial product backed by practically unlimited resources, just had to perform significantly better (think 2x) than a simple embedded servlet container. Luckily, that just isn’t the case.

11 Responses to “Containerless Deployment Performance Showdown”

Hey

Nice article. One question from my side: what about tools like Lambdaprobe? How would you embed them into a containerless setup?

Good question. I haven’t had experience with Lambdaprobe, but there’s a Grails plugin for JavaMelody which should give you the same functionality (http://grails.org/plugin/grails-melody). Because JavaMelody runs as part of your app and not as a standalone app or Tomcat plugin, it should lend itself well to embedded deployment.

In an enterprise setting, if your ops group uses a commercial monitoring solution (like HP OpenView), it should have no trouble plugging into your JVM instances via JMX.
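To illustrate the JMX route, here is a small Java sketch that reads two standard JVM metrics through the platform MBean server. It runs in-process for simplicity; a monitoring product would read the same `java.lang:type=Threading` and `java.lang:type=Memory` MBeans remotely, which requires enabling a remote JMX connector on the JVM (for example with the `com.sun.management.jmxremote.port` system property, plus authentication and SSL settings appropriate to your environment). Nothing here is specific to HP OpenView or any other product.

```java
import java.lang.management.ManagementFactory;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;
import javax.management.openmbean.CompositeData;

class JmxProbe {

    // Reads one attribute from a platform MBean, e.g. "java.lang:type=Threading".
    private static Object attribute(String objectName, String attribute) {
        try {
            MBeanServer server = ManagementFactory.getPlatformMBeanServer();
            return server.getAttribute(new ObjectName(objectName), attribute);
        } catch (JMException e) {
            throw new IllegalStateException("JMX read failed: " + objectName + "/" + attribute, e);
        }
    }

    // Live thread count, the same value a remote JMX console would show.
    static int threadCount() {
        return (Integer) attribute("java.lang:type=Threading", "ThreadCount");
    }

    // Used heap in bytes; HeapMemoryUsage is exposed as CompositeData over JMX.
    static long heapUsedBytes() {
        CompositeData usage = (CompositeData) attribute("java.lang:type=Memory", "HeapMemoryUsage");
        return (Long) usage.get("used");
    }

    public static void main(String[] args) {
        System.out.println("threads=" + threadCount() + ", heapUsedBytes=" + heapUsedBytes());
    }
}
```

Because these are standard platform MBeans, they are available whether the app runs inside WebLogic or as a standalone jar with embedded Tomcat or Jetty.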

Hi Paul,

Your blog post sets out to make a point about container vs. container-less deployment, but what you compare is speed. Frankly, I don’t understand why a deployment option should influence speed. Take Jetty or Tomcat: both can be deployed as a traditional container installation or embedded, and their speed and throughput won’t change with the deployment option. The container vs. container-less discussion is more about deployment agility and ease of operation.

In reality what you are comparing is Jetty vs Tomcat vs Weblogic performance.

In that case, the test above needs to be revisited:

First, the test methodology isn’t appropriate. JMeter is a synchronous tool, which means that at any point in time the number of requests in flight will be at most the number of running threads in JMeter. You also have to make sure that, on the server side, Jetty, Tomcat and WebLogic are all configured with exactly the same maximum number of threads (MAX_THREAD) for the connection pool.

If the server side has more threads than JMeter, some threads sit doing nothing. If it has fewer threads than JMeter, requests start to queue up, and there are several different queues involved: the thread pool queue, the TCP backlog queue, etc.
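The queueing case can be reproduced in miniature with the JDK’s `ThreadPoolExecutor`, standing in (as a simplification) for a container’s request thread pool; real connectors add further queues such as the TCP accept backlog. In this sketch, `serverThreads` and `clientRequests` are illustrative parameters: every request blocks until released, so whatever does not fit in the pool has to wait in its queue.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class PoolQueueDemo {

    // Submits clientRequests blocking tasks to a fixed pool of serverThreads
    // and returns how many tasks were waiting in the pool's queue.
    static int queuedWhileBusy(int serverThreads, int clientRequests) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                serverThreads, serverThreads, 0L, TimeUnit.MILLISECONDS,
                new LinkedBlockingQueue<>());
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < clientRequests; i++) {
            pool.execute(() -> {
                try {
                    release.await(); // a slow request holding its server thread
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        // Each new worker thread takes its first task directly, so only the
        // requests beyond serverThreads end up in the queue.
        int queued = pool.getQueue().size();
        release.countDown();
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return queued;
    }

    public static void main(String[] args) {
        System.out.println("4 threads, 8 requests -> queued: " + queuedWhileBusy(4, 8));
        System.out.println("8 threads, 4 requests -> queued: " + queuedWhileBusy(8, 4));
    }
}
```

With 4 server threads and 8 blocked requests, 4 requests wait in the queue; with 8 threads and 4 requests, nothing queues. Note that this executor starts threads on demand, so the spare capacity in the second case is never created, whereas a container that prestarts its pool would leave those threads idle.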

There are a number of configuration changes to make to your system when load testing; please see this for reference (http://wiki.eclipse.org/Jetty/Howto/High_Load).

Additionally, you can’t use a synchronous tool such as JMeter as your load tool, because while one request is pending, its thread is blocked waiting for the response. So the maximum request rate you can achieve is limited by req_x_sec = 1000 millis / avg_req_time_in_millis * n_threads. An asynchronous load tool such as Gatling (https://github.com/excilys/gatling) gives better results.
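The bound above is easy to evaluate. A sketch in Java, with illustrative inputs that are not taken from the benchmark results in the post:

```java
class ClosedLoopBound {

    // Upper bound on requests/second for a synchronous (closed-loop) generator:
    // each of nThreads threads completes at most 1000 / avgRequestMillis requests per second.
    static double maxRequestsPerSecond(int nThreads, double avgRequestMillis) {
        if (avgRequestMillis <= 0) {
            throw new IllegalArgumentException("avgRequestMillis must be > 0");
        }
        return 1000.0 / avgRequestMillis * nThreads;
    }

    public static void main(String[] args) {
        // Hypothetical 20 ms average response time at each thread level.
        for (int threads : new int[] {10, 20, 40, 80}) {
            System.out.printf("%d threads @ 20 ms -> at most %.0f req/s%n",
                    threads, maxRequestsPerSecond(threads, 20.0));
        }
    }
}
```

In a closed loop with no think time, throughput and mean response time are tied together this way (it is Little’s law with `n_threads` requests in flight), which is why a synchronous generator cannot push more load than its thread count and the server’s latency allow.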

Finally, which IO implementation of Jetty and Tomcat did you use, NIO or basic (blocking) IO? Jetty trades throughput for lower latency, so I’m not surprised that this showed up in your benchmark.

Read more about throughput benchmarks and their limitations here: http://webtide.intalio.com/2010/06/lies-damned-lies-and-benchmarks-2/

In conclusion, I would choose between container and container-less solutions for process and deployment reasons, certainly not for performance reasons. Performance is a very complicated matter that needs to be carefully evaluated before making assertions. The OS, network, hardware and software need to be finely tuned to play well together when pushing performance limits. I’m sure you’ll find the links above interesting reading for expanding your test into a more comprehensive test suite.

regards

Bruno

on December 17th, 2012 at 9:44 pm

[…] Containerless Deployment Performance Showdown […]

You are doing a great job showing the real value of, and the compelling case for, enterprise features in IT shops, and blowing away “enterprise-y” misconceptions.

I think this counterculture arose from the failure of bad Java Enterprise Edition APIs, like EJB2. But the community really “threw the baby out with the bathwater”.

The best tools and methods should be smart enough to adapt to as many contexts as possible out of the box.

Please, keep up the great work.

Best regards.

The truth in some IT shops is that many moved away from enterprise containers believing they were bloated and certainly 2x slower, based on false assumptions drawn from startup times.

From the Ops point of view, EE containers are a lot easier to manage and monitor out of the box, but Devs suffered a lot under this deployment lifecycle model. There are many IT shops that just “Dev” and don’t “Ops”. Enterprise IT departments have Ops teams to manage and monitor many high-volume, mission-critical apps. In some cases a rapid deployment lifecycle appears slow because Devs didn’t understand the security concerns of mission-critical contexts.

But nowadays, with the DevOps model and primarily because of hot-deploy capabilities in development tools like Grails, many of these “productivity breakers” are mitigated.

I guess it somewhat depends on the nature of the corporation. In our particular case, the Ops team does manage J2EE application servers. This is partially dictated by PCI compliance requirements for separation of roles, which effectively prevent true DevOps adoption. The Ops team nevertheless seems very excited about the prospect of standalone deployment, because it removes the J2EE container layer from their sphere of control (in terms of installation, configuration and management). In their eyes, the whole application deployment could become as simple as running a startup script, which apparently makes a big difference for them.
